From Data to Design: Six Steps to Building Future-Ready Warehouses

By Justin Willbanks, Principal, Solution Design, Designed Conveyor Systems

Many warehouse leaders know their operation’s pain points: a bottleneck between receiving and putaway, slow pick rates, or overstuffed storage. What can be harder to pinpoint is why those issues occur or how to fix them efficiently.

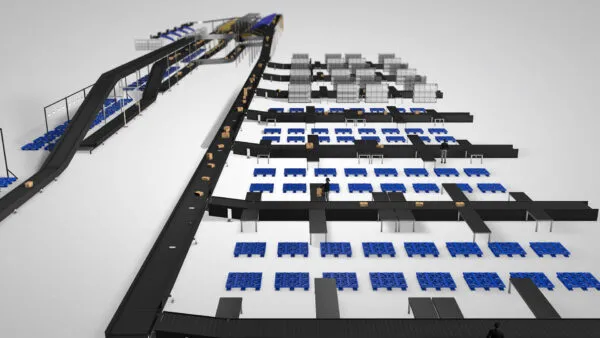

That’s where a data-driven design process makes the difference. At Designed Conveyor Systems (DCS), every facility design project — whether greenfield or a retrofit — begins with operational data, not assumptions. Validating and understanding that data gives DCS the confidence to design custom warehouse automation solutions that solve customers’ problems and support their long-term growth.

To turn that data into actionable design insight, the DCS engineering team follows a six-step process that ensures every recommendation is backed by facts. It begins with collecting and validating the right data, then progresses through forecasting future growth, interpreting the numbers to guide design, and evaluating multiple solution scenarios. The process also accounts for imperfect data, leverages advanced analytical tools, and applies lessons learned to drive continuous improvement. The goal? To create a facility that performs efficiently on day one and adapts to changing business needs for years to come.

Step 1: Collecting the Right Data

Every system design project starts with a structured data collection and validation phase. DCS first requests a defined set of data to establish a clear operational baseline, including:

- Twelve months of order history, including the number of orders filled per day, the number of stock keeping units (SKUs) per order, and the quantity of each SKU picked per order.

- Product master data to account for the type of units handled (eaches, cases, or pallets), SKU dimensions, weights, and packaging type.

- Inbound trailer volumes and number of receipts per day.

- Monthly on-hand inventory snapshots.

This information helps DCS understand both throughput and storage requirements. It paints a picture of how much product comes in, how much goes out, and how that flow translates into space, replenishment, and labor needs. These metrics form the foundation for every system design decision that follows.
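As a minimal sketch of how an order history like the one requested above could be reduced to a baseline order profile, the Python below computes average orders per day, SKUs per order, and units per order and per line. The record layout, field names, and sample values are hypothetical and only illustrate the idea; they are not the team's actual data model or tooling.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical order lines: (ship_date, order_id, sku, units_picked).
# Assumes one row per SKU per order, so each row is one order line.
order_lines = [
    ("2024-03-01", "A100", "SKU-1", 2),
    ("2024-03-01", "A100", "SKU-2", 1),
    ("2024-03-01", "A101", "SKU-1", 6),
    ("2024-03-02", "A102", "SKU-3", 4),
    ("2024-03-02", "A102", "SKU-1", 1),
    ("2024-03-02", "A102", "SKU-2", 1),
]


def order_profile(lines):
    """Summarize order lines into a simple baseline order profile."""
    orders_by_day = defaultdict(set)
    skus_by_order = defaultdict(set)
    units_by_order = defaultdict(int)
    for day, order_id, sku, units in lines:
        orders_by_day[day].add(order_id)
        skus_by_order[order_id].add(sku)
        units_by_order[order_id] += units
    return {
        "avg_orders_per_day": mean(len(o) for o in orders_by_day.values()),
        "avg_skus_per_order": mean(len(s) for s in skus_by_order.values()),
        "avg_units_per_order": mean(units_by_order.values()),
        "avg_units_per_line": sum(units_by_order.values()) / len(lines),
    }


profile = order_profile(order_lines)
for metric, value in profile.items():
    print(f"{metric}: {value}")
```

In practice the same aggregation would run over a full twelve months of history, and the daily order counts would also be kept as a series rather than only averaged, since peaks matter as much as means.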

Notably, very few operations have perfectly clean or complete datasets. However, that doesn’t stop progress. When data is missing, the DCS team uses averages or comparable product families to fill in the gaps. For example, if item dimensions are incomplete, representative values based on known SKUs can be applied.

This pragmatic approach allows the analysis to move forward confidently while maintaining analytical integrity. The key is transparency. The team documents every assumption so that design recommendations remain traceable and credible.
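One way to picture that gap-filling step is the sketch below, which fills a missing SKU dimension with the average of known SKUs in the same product family and logs each substitution as a documented assumption. The field names, sample rows, and the fallback to an overall average when a family has no known values are all illustrative assumptions, not a description of DCS’ internal method.

```python
from statistics import mean

# Hypothetical product master rows; None marks a missing dimension.
product_master = [
    {"sku": "SKU-1", "family": "shoes", "length_in": 12.0},
    {"sku": "SKU-2", "family": "shoes", "length_in": 14.0},
    {"sku": "SKU-3", "family": "shoes", "length_in": None},
    {"sku": "SKU-4", "family": "hats", "length_in": 9.0},
    {"sku": "SKU-5", "family": "bags", "length_in": None},
]


def fill_missing(rows, field):
    """Fill gaps in `field` with family averages and record each assumption."""
    # Collect the known values for each product family.
    known = {}
    for row in rows:
        if row[field] is not None:
            known.setdefault(row["family"], []).append(row[field])
    all_known = [v for values in known.values() for v in values]

    filled, assumptions = [], []
    for row in rows:
        new_row = dict(row)  # leave the original data untouched
        if new_row[field] is None:
            if row["family"] in known:
                new_row[field] = mean(known[row["family"]])
                basis = f"mean of known {row['family']} SKUs"
            else:
                # Assumed fallback: no comparable family data is available.
                new_row[field] = mean(all_known)
                basis = "mean of all known SKUs"
            assumptions.append((row["sku"], field, new_row[field], basis))
        filled.append(new_row)
    return filled, assumptions


filled_master, assumption_log = fill_missing(product_master, "length_in")
for sku, field, value, basis in assumption_log:
    print(f"{sku}: {field} set to {value:.1f} ({basis})")
```

Returning the assumption log alongside the filled data keeps every substituted value traceable back to the rule that produced it.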

Step 2: Applying Tools to Streamline Analysis

Once the data is collected, the DCS team cleans and organizes it using SQL, Power BI, Excel, or a combination of the three to help visualize key patterns. Charts and summaries reveal peaks, valleys, and order profiles that tell the story of an operation’s rhythm.

These tools help manage and analyze millions of data points, allowing DCS to uncover patterns quickly and communicate findings clearly. Emerging technologies, including artificial intelligence (AI)-enabled platforms like Alteryx, are helping the team streamline data cleaning and pattern recognition even further. The goal is always the same: to make the data tell its story faster and with greater accuracy.

Step 3: Validating What the Data Really Says

Before moving into design, DCS reengages with the client to confirm everyone agrees on what the data actually represents. It’s a critical checkpoint that establishes confidence in the baseline.

This step of the data analysis process starts with a comprehensive data review presentation that walks through every key dataset, including annual throughput, order volumes, peaks and valleys, and order profiles. For example, the DCS team charts total shipments from January through December and confirms whether those trends align with what the client experiences operationally. Does the data show their true busiest and slowest periods? Does the average order size reflect what teams see on the floor?
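The seasonality questions above can be framed with a small calculation like the following sketch, which finds the busiest and slowest months and the peak-to-average ratio from monthly shipment totals. The monthly figures are invented for illustration only.

```python
from statistics import mean

# Hypothetical monthly shipment totals (units shipped) for one year.
monthly_shipments = {
    "Jan": 80_000, "Feb": 70_000, "Mar": 90_000, "Apr": 95_000,
    "May": 100_000, "Jun": 100_000, "Jul": 95_000, "Aug": 105_000,
    "Sep": 110_000, "Oct": 125_000, "Nov": 150_000, "Dec": 80_000,
}


def seasonality_summary(monthly):
    """Identify the peak and slowest months and the peak-to-average ratio."""
    average = mean(monthly.values())
    peak_month = max(monthly, key=monthly.get)
    slow_month = min(monthly, key=monthly.get)
    return {
        "average": average,
        "peak_month": peak_month,
        "slow_month": slow_month,
        "peak_to_average": monthly[peak_month] / average,
    }


summary = seasonality_summary(monthly_shipments)
print(summary)
```

If the client's team expects December to be the peak but the data points to November, that mismatch is exactly the kind of question this review is meant to raise.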

From there, DCS breaks down order characteristics in greater detail, examining average SKUs per order, units per line, and other metrics that describe how the operation really functions. This validation process often surfaces discrepancies: a sudden volume spike tied to a one-time promotion, a missing dataset from another system, or even a data entry error. By discussing these anomalies openly, both sides can determine whether the data reflects reality or needs adjustment.

It’s a collaborative review process, typically involving multiple client stakeholders — operations managers, planners, and analysts — who know the business best. Their feedback helps confirm the dataset’s accuracy and completeness. Once everyone gives the thumbs up, the project moves forward.

Step 4: Designing for the Future, Not Just Today

After establishing the current-state baseline, DCS applies growth rates and long-term business forecasts to develop the design criteria. The goal is to create a facility that supports where the business will be five or ten years from now — not just where it is today.

That includes modeling volume growth, SKU proliferation, and potential changes in distribution network strategy. Factoring in future-state assumptions from the start prevents costly retrofits and keeps operations adaptable as the business evolves.
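A simple way to express this step is compound growth: a design value equals the baseline multiplied by (1 + g) raised to the number of years, where g is the annual growth rate. The sketch below applies that formula to two metrics at five- and ten-year horizons. The baseline values and growth rates are hypothetical, and a real forecast may well use rates that vary by year or by product line.

```python
def project(baseline: float, annual_growth_rate: float, years: int) -> float:
    """Compound a baseline value forward by a fixed annual growth rate."""
    return baseline * (1 + annual_growth_rate) ** years


# Hypothetical current-state baseline and growth assumptions.
baseline = {"peak_units_per_day": 40_000, "active_skus": 12_000}
growth_rates = {"peak_units_per_day": 0.05, "active_skus": 0.03}

# Design criteria at five- and ten-year horizons, rounded to whole units.
design_criteria = {
    horizon: {
        metric: round(project(value, growth_rates[metric], horizon))
        for metric, value in baseline.items()
    }
    for horizon in (5, 10)
}

for horizon, criteria in design_criteria.items():
    print(f"Year {horizon}: {criteria}")
```

Under these assumed rates, peak daily volume grows by roughly 63 percent over ten years, which shows how quickly a system sized only for today's volume could fall short.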

(To see how this forward-thinking approach plays out in practice, check out DCS’ case studies showcasing customized designs across a variety of industries.)

Step 5: Turning Data into Design Insights

Once the data has been validated and growth projections applied, the next step is to turn those insights into actionable design concepts. At this point, the team has a clear understanding of how products move through the operation — by unit of measure, by SKU velocity, and by storage type — and can begin mapping which technologies best fit each functional area of the facility.

Drawing from years of experience evaluating and implementing a wide range of custom warehouse automation solutions, DCS’ engineers start by assembling a short list of candidate technologies. Depending on the client’s operational priorities, the list might include options for inbound handling, storage and retrieval, picking, or shipping. Each recommendation is supported by data that shows why it makes sense.

Before narrowing in on a design direction, the DCS team typically hosts an education session with the client. This includes walking through the use cases for the automated warehouse picking and packing solutions under consideration, such as goods-to-person technologies, automated put walls, or robotic picking systems. The team also shares examples from other projects to bring everyone up to speed on what’s available in the market, especially if some technologies are unfamiliar. The session covers how each technology works, what problems it solves, and how it could apply to the client’s operation.

After establishing that baseline understanding, the design team explores alternatives. For each functional area, such as picking, the team may present two or three technology options based on throughput, storage needs, footprint, and cost. Each concept is modeled to show how it fits within the building layout, along with a rough order of magnitude (ROM) cost estimate.

Next, the team compares the options side by side. For instance, one technology might require 50,000 square feet and cost $10 million, while another takes 60,000 square feet and costs $12 million. Footprint and capital investment are only part of the comparison, so the team also weighs labor impact, space savings, and flexibility. It then builds a business case for each alternative, calculating estimated savings and projected return on investment (ROI), often over three- to five-year timeframes.
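To make the business-case arithmetic concrete, the sketch below compares two options using simple payback (capital cost divided by annual savings) and ROI over a fixed horizon (net savings divided by capital cost). The footprints and capital costs reuse the illustrative figures above; the annual savings values are hypothetical assumptions added for the example, and a full business case would typically include factors this sketch ignores, such as operating costs and discounting.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    footprint_sqft: int
    capital_cost: float
    annual_savings: float  # hypothetical estimate, e.g. labor savings

    def payback_years(self) -> float:
        """Simple payback: years of savings needed to recover the capital."""
        return self.capital_cost / self.annual_savings

    def roi(self, years: int) -> float:
        """Undiscounted ROI over the horizon: net savings / capital cost."""
        return (self.annual_savings * years - self.capital_cost) / self.capital_cost


options = [
    Option("Technology A", 50_000, 10_000_000, 2_500_000),
    Option("Technology B", 60_000, 12_000_000, 3_600_000),
]

for option in options:
    print(
        f"{option.name}: {option.footprint_sqft:,} sq ft, "
        f"payback {option.payback_years():.1f} years, "
        f"5-year ROI {option.roi(5):.0%}"
    )
```

With these assumed savings, the larger and more expensive option pays back sooner, which is why footprint and capital cost alone rarely settle the comparison.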

Finally, the DCS team reviews the findings with the client, walking through the data and rationale behind each scenario. ROI is always a major factor, but it’s not the only one. Sometimes flexibility, scalability, or operational simplicity outweighs a slightly faster payback. Through this collaborative review, both teams can adjust assumptions, refine estimates, and ultimately align on a final recommendation — one that balances performance, cost, and long-term adaptability.

Step 6: Fine-Tuning Post-Evaluation

Data-driven design doesn’t stop once the preliminary facility concept is approved. The same analytical principles apply when fine-tuning the final design. Techniques like batch picking analysis, for example, can identify opportunities to consolidate orders, reduce travel, and improve labor efficiency.

These often-overlooked optimization opportunities can reduce the size, complexity, and overall cost of the system. Data offers the visibility to make those adjustments intelligently.

From Uncertainty to Clarity

When warehouse design decisions are grounded in operational data, businesses gain confidence that every investment supports long-term performance. The right data — validated, interpreted, and applied — transforms warehouse design from guesswork into precision.

At DCS, we believe data-driven design is the key to building smarter, more scalable operations that adapt as business needs evolve.

Ready to see what your data reveals?

Learn how DCS’ data-driven design process can help you build a more efficient, future-ready operation that incorporates automated warehouse picking and packing solutions. Connect with us to start your data validation review.